Origami Sensei

What key design principles should be considered for optimizing creative task learning through the utilisation of mixed reality tools?

My Role Design Researcher, UI Designer

Team Qiyu Chen, Richa Mishra, Prof Dina ElZanfaly, Kris Kitani

Context Design Research Assistantship at Embodied Computation Lab + Extended Reality Lab @ CMU

Overview

Traditional learning methods for hands-on creative tasks, like origami, lack real-time feedback. This hinders beginners, who cannot track their progress or identify areas for improvement. The Origami Sensei project addresses this gap by leveraging modern computer vision and mixed reality technologies.

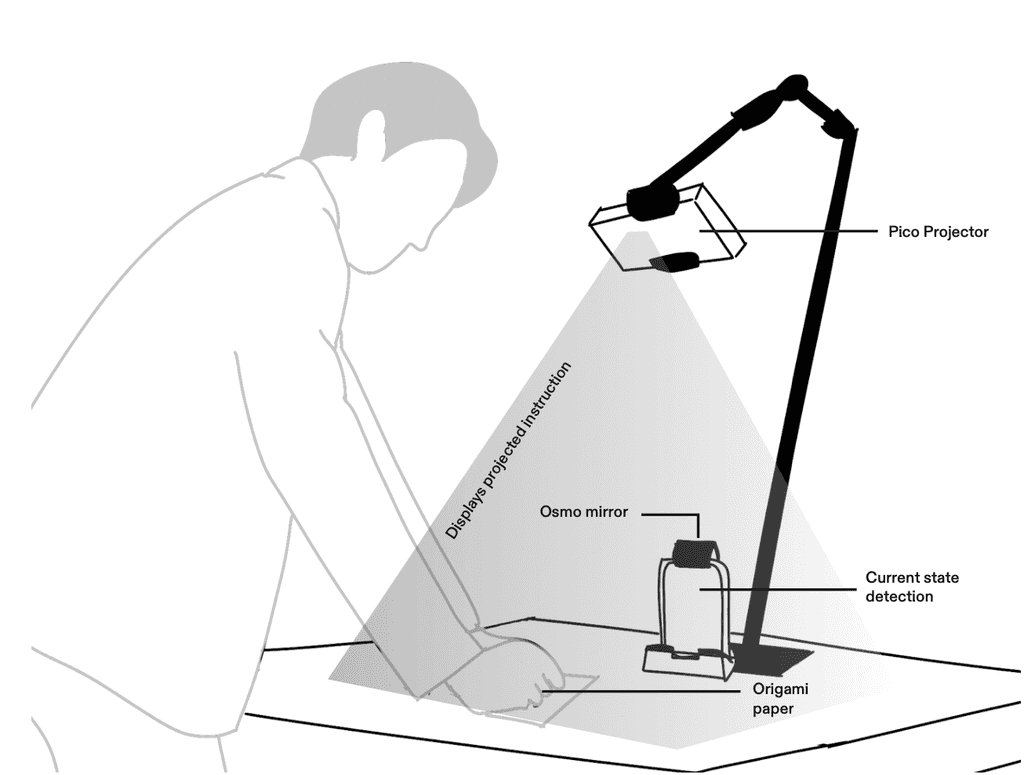

Origami Sensei empowers users to craft their own origami pieces from a collection of pre-existing designs. Leveraging advanced computer vision algorithms, the system recognizes the user's current origami step and delivers instant visual projections and verbal feedback to aid in successfully executing the subsequent steps.

In this project, I led the exploratory design process to understand the design principles for creating learning tools using mixed reality.

EXPLORATIVE RESEARCH

What are the design principles for creative task learning tools in a mixed reality environment?

I joined this project in August 2023, when the team had already laid the foundation for Origami Sensei and developed an early prototype featuring a computer vision model. I then helped conduct a series of research activities to determine an appropriate concept.

Ergonomics Literature Review

Before delving into the user's perspective, I explored how ergonomic principles could enhance user experiences and interactions with Origami Sensei. However, finding existing design principles in the literature proved challenging. Despite hours of reading papers, I found that, given the tool's novelty, comprehensive references on design principles are not yet widely available.

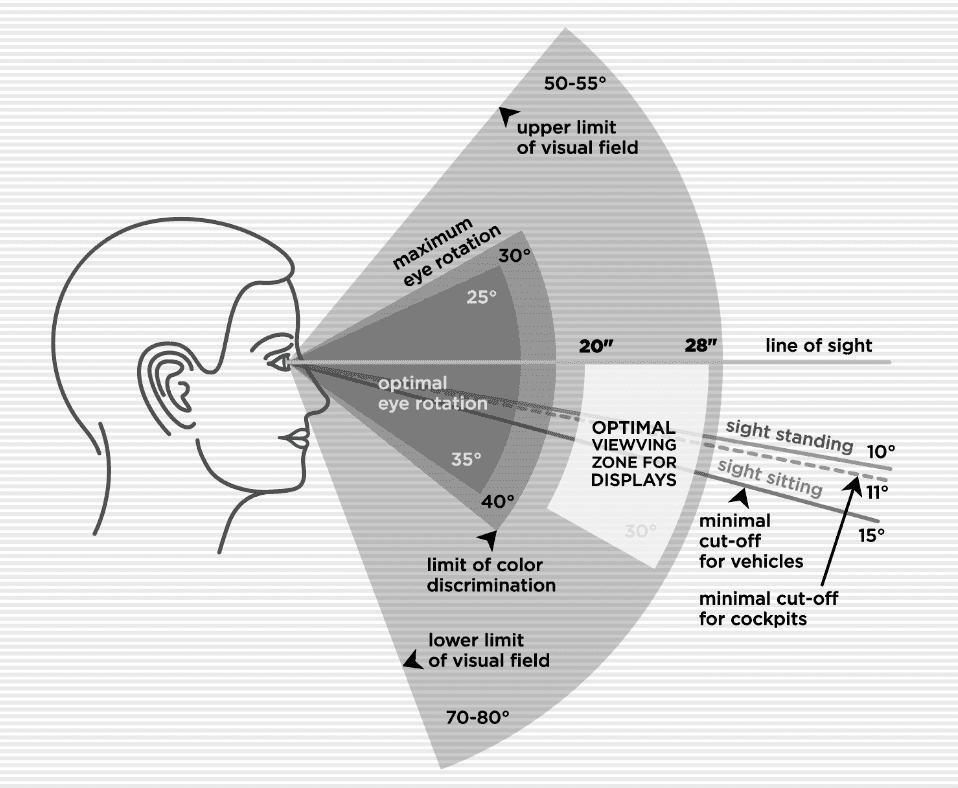

I shifted focus to understanding the ergonomics of the average person in relation to computer screens. This allowed me to grasp common-sense visibility and movement principles and affordances.

Optimal Viewing Zone

The optimal viewing zone for screens and technology interfaces refers to the ideal distance for comfortable and immersive viewing. Research suggests that the optimal zone falls between 20 and 28 inches away from the eyes.

Additionally, the angle at which your eyes interact with the interface matters. Ideally, your eye angle rotation should be no more than 30 degrees to avoid neck strain and fatigue.
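As a rough worked example of these two figures, the sketch below converts the 20 to 28 inch zone to centimeters and estimates how far content can sit from the straight line of sight before the eye has to rotate more than 30 degrees. It assumes a simplified geometry (a flat surface perpendicular to the line of sight), and the function names are illustrative, not part of the Origami Sensei codebase.

```python
import math

INCH_TO_CM = 2.54


def viewing_zone_cm(min_in=20.0, max_in=28.0):
    """Convert the viewing-distance range from inches to centimeters."""
    return (min_in * INCH_TO_CM, max_in * INCH_TO_CM)


def max_offset_cm(distance_cm, max_angle_deg=30.0):
    """Largest offset from the line of sight that stays within the angle limit.

    Simplifying assumption: the viewed surface is flat and perpendicular
    to the line of sight, so offset = distance * tan(angle).
    """
    return distance_cm * math.tan(math.radians(max_angle_deg))


near, far = viewing_zone_cm()
print(f"Viewing zone: {near:.1f} to {far:.1f} cm")
print(f"Max offset at {near:.1f} cm: {max_offset_cm(near):.1f} cm")
```

Under these assumptions, content placed at the near edge of the zone can sit roughly 29 cm off the line of sight before crossing the 30 degree limit.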

Optimal Body Position

The optimal body position for screens and technology interfaces refers to the ideal distance for comfortable movement. The only guidance I found in the research is that the screen and tools should be positioned within limb length, that is, within arm's reach.

Additionally, we need to be mindful of differences in people’s height, because height also affects their optimal viewing zone.

Exploratory Making

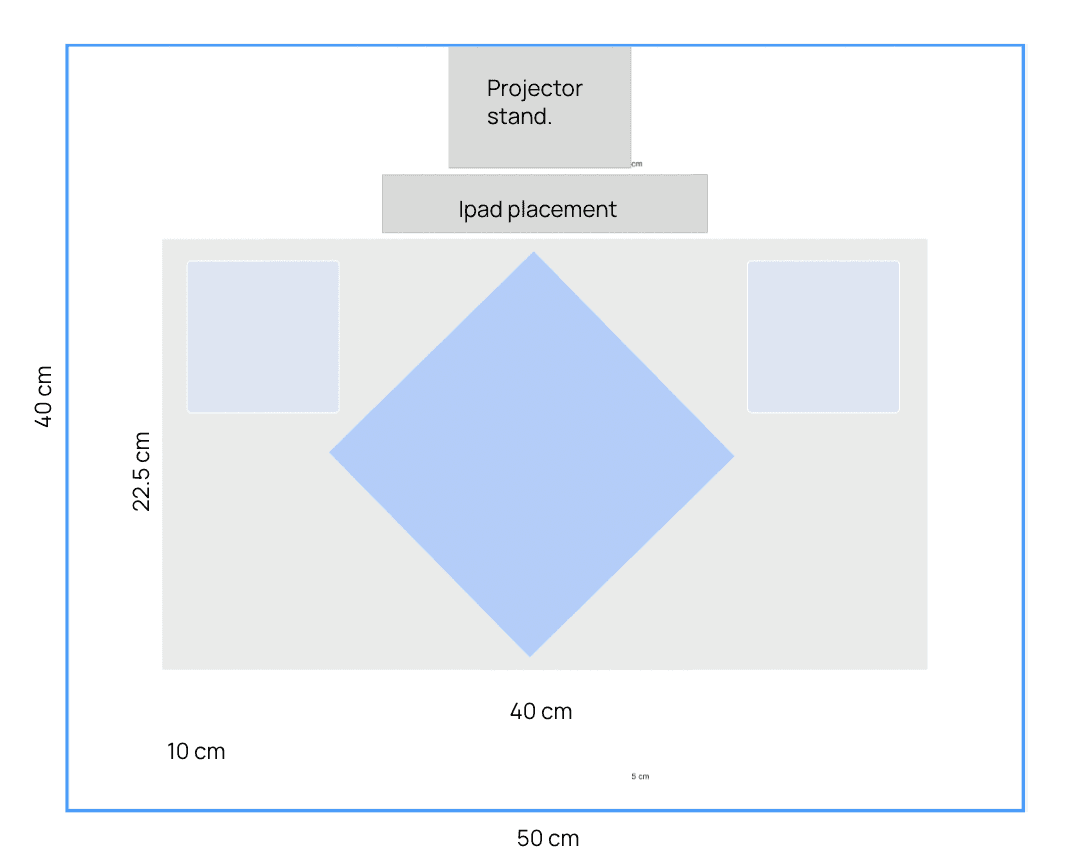

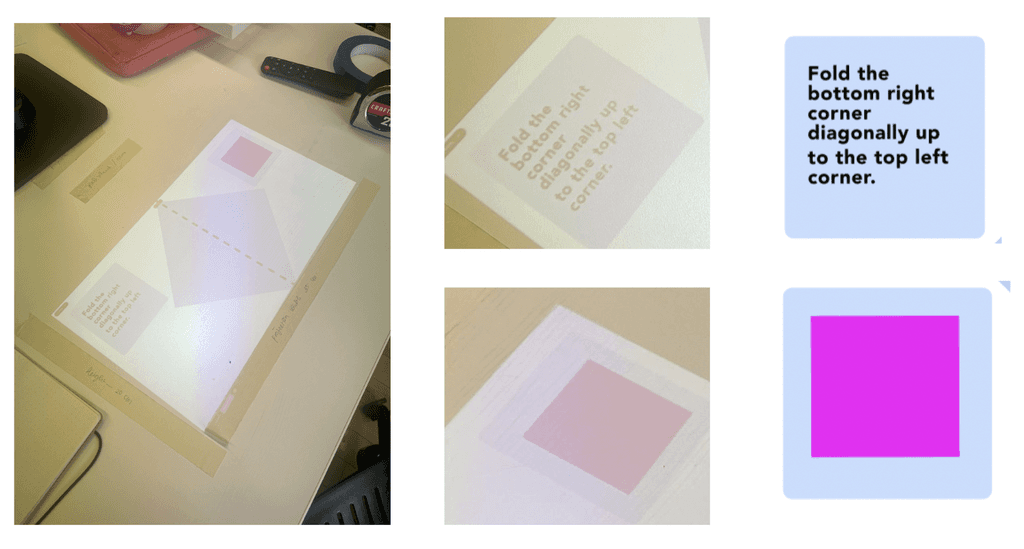

Having established various measurements based on an ergonomic model, I proceeded to experiment with diverse physical setups for the mixed reality environment. Given the computer vision model's continuous need to detect origami movements, I opted for a table setup. I also decided to test the UI components.

Projection Visual Principles

Readability

The projection’s visual components (text, graphics, lines) should be clearly readable by the user.

Maintain a minimum paragraph spacing of 10px and use letter spacing. Choose sans-serif fonts for clarity and reduced eye strain on the projection.

Legibility

Ensure sufficient contrast between text/line and background colors for increased visibility. Avoid subtle color combinations that may not display well on projectors.
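One way to put a number on "sufficient contrast" is the WCAG 2.x contrast ratio. This is a minimal sketch, not a method used in the project: WCAG is a screen standard that I am borrowing here as an assumption, and a real projector under ambient light will deliver lower contrast than the computed value. The function names are illustrative.

```python
def relative_luminance(rgb):
    """WCAG 2.x relative luminance of an sRGB color given as 0-255 integers."""
    def linearize(channel):
        c = channel / 255.0
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


# Example: white instruction text on a black projection background.
ratio = contrast_ratio((255, 255, 255), (0, 0, 0))
print(f"Contrast ratio: {ratio:.1f}:1")
```

WCAG AA asks for at least 4.5:1 for normal text on screens. For projection it is probably safer to aim well above that, since the measured contrast on the table depends on the room lighting.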

Place text, lines, and graphics where they won't be covered by hand movements for clear visibility.

Intuitive Reading

The instruction area is placed on the left, and the next step is on the right side to facilitate more intuitive reading (from left to right: reading the instruction first, then seeing what they're going to make).

Instruction Content Principles

Nudge for Progression

Instructions should include a progress bar or step number to help users know where they are in the journey and nudge them to proceed.

Easy to Understand

The language used in the instruction content should be clear and concise. All symbols and lines should be introduced to users beforehand.

Easy Error Recovery

The instructions should help users recognize when they've made a mistake and provide them with ways to recover.

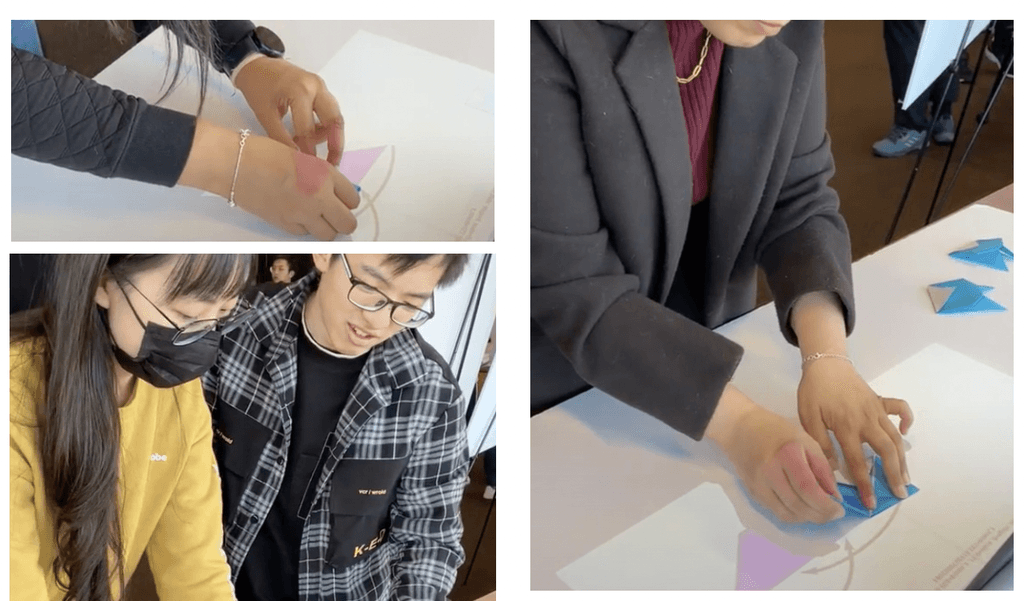

Rapid User Testing

Rapid UI testing was conducted with 8 volunteers to gather insights on the UI components of the projected instructions. How do users perceive the current UI? What do they find particularly challenging to follow, or intrusive?

How do users perceive the current UI elements such as the instruction text, animated GIF, and step number?

Can users identify specific UI elements that they believe could be improved? What feedback do they provide regarding these elements?

What are users' opinions on the learning flow and error state within the current user interface?

Key Insights

Clearer differentiation between error and success state

Currently, users can’t immediately see that they’re making an error because the colours used for visual feedback are not distinct enough.

Animated GIF edges and colors could be enhanced to help users in folding.

The colours of the GIF edges could be clearer, because the current gradient confuses users about which side to fold.

Paper projection does not match the real paper size, which causes confusion.

Users highlighted the need for the paper projection to match the real paper so that the folding lines align.

FINAL DESIGN CONCEPT

Origami Sensei XR Learning

Planar Origami Model

Using a planar origami design, simplifying the folding steps for clarity, and integrating instructional figures and GIFs into the design.

iOS Application

The iOS app seamlessly integrates task selection and manual processing or backing, while also enabling real-time detection capabilities.

Projection Interface

For a dynamic projection setup, a Flask backend is paired with a Jinja frontend and an SSE (Server-Sent Events) API. The projection presents instructions through a combination of textual descriptions, image overlays, animations, and sound elements to enhance the user experience.
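To illustrate how an SSE stream can push step updates to a projection page, here is a minimal sketch of the message format using only the Python standard library. It is not the project's actual backend: the `format_sse` and `step_stream` helpers, the `step` event name, and the payload fields are all hypothetical.

```python
import json


def format_sse(data, event=None):
    """Serialize one Server-Sent Events message.

    Each message is a set of "field: value" lines ended by a blank line.
    The payload is JSON-encoded so it always fits on a single data line.
    """
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    lines.append(f"data: {json.dumps(data)}")
    return "\n".join(lines) + "\n\n"


def step_stream(steps):
    """Yield one SSE message per folding step (hypothetical payload shape)."""
    total = len(steps)
    for number, text in enumerate(steps, start=1):
        yield format_sse({"step": number, "total": total, "text": text}, event="step")


# Example: print the raw messages a browser would receive.
for message in step_stream(["Fold the paper in half", "Unfold and rotate"]):
    print(message, end="")
```

In a Flask app, a generator like this could be returned as `Response(step_stream(steps), mimetype="text/event-stream")`, and the page could listen for updates with the browser's `EventSource` API.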

Camera and Mirror

The system utilizes a tablet camera in conjunction with a mirror. This integrated setup enables the system to capture and analyze the user’s actions, allowing it to provide real-time feedback and information to guide the user through the next steps in the folding process.

Table Setup

The table setup ensures reachability with an elbow-to-finger range of 35 to 50 centimeters, keeps content within a readability range of less than 60 centimeters, and provides a hand movement space at least 40 centimeters wide.
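The three ranges above can be expressed as a simple checklist. The sketch below is only an illustration of that idea: the `TableSetup` class and `check_setup` function are hypothetical names, and the thresholds are copied directly from the figures stated above.

```python
from dataclasses import dataclass


@dataclass
class TableSetup:
    """Measurements of a candidate table setup, all in centimeters."""
    reach_cm: float                # elbow-to-finger distance to the work area
    viewing_distance_cm: float     # eye-to-projection distance
    hand_space_width_cm: float     # free width available for hand movement


def check_setup(setup):
    """Return a list of problems; an empty list means all three ranges are met."""
    problems = []
    if not 35 <= setup.reach_cm <= 50:
        problems.append("reach should be between 35 and 50 cm")
    if not setup.viewing_distance_cm < 60:
        problems.append("viewing distance should be under 60 cm")
    if setup.hand_space_width_cm < 40:
        problems.append("hand movement space should be at least 40 cm wide")
    return problems


# Example: a setup that is too far to read comfortably.
print(check_setup(TableSetup(reach_cm=45, viewing_distance_cm=70, hand_space_width_cm=45)))
```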

UI Design for Projection

Lia Purnamasari / 2024

Pittsburgh, Pennsylvania